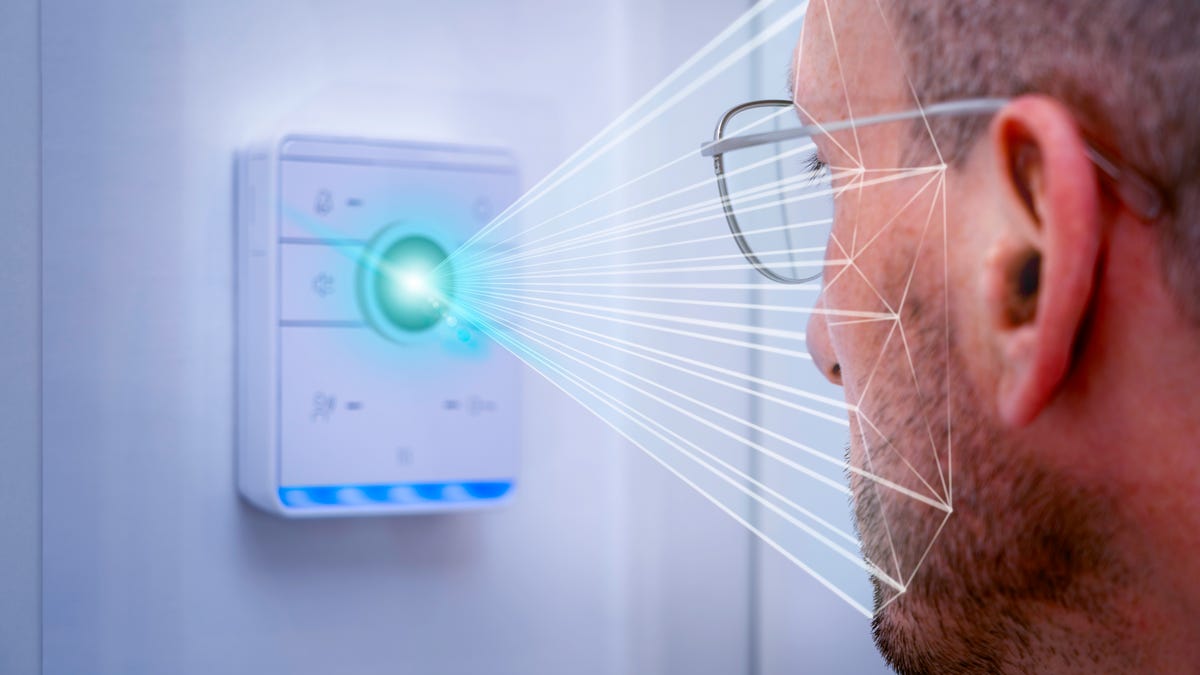

The Federal Trade Commission (FTC) is stepping up its scrutiny of a technology company that claims its AI-powered facial recognition system is free from bias. This investigation follows growing concerns over the ethics of artificial intelligence and the potential for discriminatory practices in facial recognition technology. As the issue of AI bias continues to dominate conversations in both the tech industry and government circles, the FTC’s actions raise important questions about transparency, accountability, and the regulation of emerging technologies.

FTC Investigation: A Response to Growing Ethical Concerns in AI

The Federal Trade Commission’s inquiry into the AI company underscores the increasing attention being paid to the ethics of facial recognition technology. The company in question has marketed its AI-driven system with a bold claim: it can recognize faces without bias. However, experts have long warned that facial recognition systems are prone to uneven accuracy and, in particular, are more likely to misidentify individuals from marginalized groups. This risk is greatest when systems are trained on datasets that lack diversity or are not evaluated for accuracy separately across demographic groups.

Beyond the company’s specific claims, the FTC’s investigation highlights the broader regulatory challenges surrounding AI technologies. Because Section 5 of the FTC Act prohibits unfair or deceptive practices, accuracy or fairness claims that a company cannot substantiate can fall within the commission’s reach. The commission’s focus on these concerns comes at a time when multiple stakeholders, from governments to advocacy groups, are questioning the risks and fairness of AI applications in sensitive areas such as law enforcement, hiring, and surveillance.

The Controversy of “Zero Bias” Claims

The assertion of “zero bias” is particularly controversial because bias in AI models is often subtle and can go unnoticed until the technology is deployed in real-world scenarios. While the company has advertised its facial recognition software as free from bias, external audits and independent researchers have found that even the most advanced AI systems can still produce harmful disparities. These disparities are usually linked to factors such as race, gender, and age, so that some groups are misidentified, or not recognized at all, more often than others.

Some of the most notable concerns with AI-powered facial recognition include:

- Racial and Gender Bias: Studies have shown that facial recognition systems tend to have higher error rates for people of color, especially women of color. This can result in wrongful identification or failure to recognize individuals.

- Data Quality and Representation: Many AI systems are trained on data that lacks sufficient diversity, and this gap in training datasets is a major contributor to bias in facial recognition systems.

- Unintended Consequences: The implementation of biased facial recognition software can have harmful social consequences, including racial profiling and discrimination in public spaces.

Real-World Examples of Bias in AI

Numerous studies have documented the problems inherent in facial recognition technology. In December 2019, the National Institute of Standards and Technology (NIST) published an extensive evaluation of 189 facial recognition algorithms, finding that many were more likely to produce false matches for Asian and African American faces than for Caucasian faces; in one-to-many searches, false positives were highest for African American women. Separately, the 2018 Gender Shades study by Joy Buolamwini and Timnit Gebru found that commercial gender-classification systems misclassified darker-skinned women far more often than lighter-skinned men.

Furthermore, organizations such as the ACLU and the Algorithmic Justice League have long advocated for greater transparency and accountability in facial recognition technology. Their campaigns have highlighted incidents where these systems were used in law enforcement and public surveillance, leading to wrongful arrests, racial profiling, and violations of privacy rights. In response to such concerns, several cities, such as San Francisco, have banned or severely restricted the use of facial recognition by government agencies, and some, such as Portland, Oregon, have extended those limits to private businesses.

The Role of Transparency and Accountability in AI Development

At the heart of the FTC’s investigation lies a critical question: how can companies ensure that AI systems are both transparent and accountable in their decision-making processes? Transparency is a key factor in building trust with the public, as well as in mitigating the risks of bias and discrimination. The challenge is that AI models, especially those based on deep learning techniques, often operate as “black boxes,” meaning their decision-making processes are not easily understood or explainable by humans.

In response to calls for greater transparency, some companies and organizations have started implementing more rigorous testing and auditing procedures. For example, Microsoft and IBM have pledged to release more detailed information about the performance of their AI systems, including data about accuracy rates across different demographics. Additionally, several organizations are calling for the creation of standardized benchmarks to assess AI fairness, which would allow for more consistent comparisons across different systems.
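To make “accuracy rates across different demographics” concrete, here is a minimal Python sketch of disaggregated evaluation for a face-verification system. It assumes a hypothetical record format (the `Trial` class), a single similarity threshold of 0.5, and made-up toy scores; the function names and group labels are illustrative and do not reflect NIST’s, Microsoft’s, or IBM’s actual tooling. It reports the two standard verification error rates per group: the false match rate (FMR, impostor pairs wrongly accepted) and the false non-match rate (FNMR, genuine pairs wrongly rejected).

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Trial:
    """One face-verification comparison (hypothetical record format)."""
    group: str          # demographic label attached to the pair being compared
    same_person: bool   # ground truth: do both images show the same person?
    score: float        # similarity score produced by the system under test


def error_rates_by_group(trials, threshold):
    """Return {group: (false_match_rate, false_non_match_rate)} at `threshold`.

    A pair counts as a predicted match when score >= threshold.
    A rate is NaN for a group with no impostor (or no genuine) pairs.
    """
    counts = defaultdict(lambda: {"fm": 0, "impostor": 0, "fnm": 0, "genuine": 0})
    for t in trials:
        c = counts[t.group]
        predicted_match = t.score >= threshold
        if t.same_person:
            c["genuine"] += 1
            if not predicted_match:  # genuine pair wrongly rejected
                c["fnm"] += 1
        else:
            c["impostor"] += 1
            if predicted_match:  # impostor pair wrongly accepted
                c["fm"] += 1
    return {
        group: (
            c["fm"] / c["impostor"] if c["impostor"] else math.nan,
            c["fnm"] / c["genuine"] if c["genuine"] else math.nan,
        )
        for group, c in counts.items()
    }


def overall_error_rates(trials, threshold):
    """Pooled (FMR, FNMR) across all groups: the single headline number."""
    pooled = [Trial("all", t.same_person, t.score) for t in trials]
    return error_rates_by_group(pooled, threshold)["all"]


# Made-up toy scores for illustration only; not measurements of any real system.
TOY_TRIALS = (
    [Trial("group_a", False, s) for s in (0.1, 0.2, 0.3, 0.2)]
    + [Trial("group_a", True, s) for s in (0.9, 0.8, 0.7, 0.9)]
    + [Trial("group_b", False, s) for s in (0.6, 0.2, 0.55, 0.1)]
    + [Trial("group_b", True, s) for s in (0.9, 0.4, 0.8, 0.7)]
)

if __name__ == "__main__":
    print(overall_error_rates(TOY_TRIALS, threshold=0.5))   # (0.25, 0.125)
    print(error_rates_by_group(TOY_TRIALS, threshold=0.5))  # {'group_a': (0.0, 0.0), 'group_b': (0.5, 0.25)}
```

On these toy records, the pooled false match rate of 0.25 looks moderate, while the per-group breakdown shows that every false match falls on `group_b` (FMR 0.5 versus 0.0 for `group_a`). Publishing only the pooled figure would hide exactly the kind of disparity that a “zero bias” claim needs to rule out.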

Accountability and Regulation: Moving Toward a Framework

While some companies are taking steps to address these issues voluntarily, experts argue that comprehensive regulation is essential to ensure that AI technologies are developed and deployed ethically. The U.S. has no comprehensive federal AI law, which has created a fragmented landscape in which companies are largely self-regulated, with varying degrees of transparency and accountability.

In Europe, the General Data Protection Regulation (GDPR) and the Artificial Intelligence Act, which the EU adopted in 2024, have set important precedents for AI governance. These regulatory frameworks emphasize transparency, fairness, and the minimization of bias in AI systems. By establishing clear guidelines for the responsible use of AI, Europe has become a global leader in AI regulation, providing a model that other countries, including the U.S., may look to adopt.

The Broader Implications for AI Ethics

The FTC’s scrutiny of the facial recognition company is just one example of the ongoing debate surrounding AI ethics. As AI systems become increasingly integrated into various industries, the question of how to balance innovation with fairness and safety becomes more urgent. In particular, the use of facial recognition in law enforcement raises concerns about privacy, civil liberties, and the potential for systemic discrimination.

At the same time, the rapid development of generative AI and machine learning algorithms is opening up new opportunities and challenges. Whether in healthcare, finance, or entertainment, AI is poised to revolutionize many sectors. However, without proper oversight, these technologies could exacerbate existing inequalities or introduce new risks.

In the context of facial recognition, the broader conversation also touches on issues such as surveillance and individual rights. Critics argue that the pervasive use of facial recognition in public spaces could lead to a “surveillance state,” where individuals’ movements and actions are constantly monitored. This creates a conflict between the potential benefits of AI in enhancing security and the fundamental right to privacy.

Looking Forward: The Path to Ethical AI

The growing scrutiny of AI systems such as facial recognition signals a pivotal moment in the development of artificial intelligence. For companies in the tech industry, the message is clear: claims of “zero bias” or “perfect accuracy” are no longer acceptable without rigorous evidence to back them up. Transparency, accountability, and ethical considerations will be paramount as these technologies continue to evolve.

As the FTC investigation unfolds, it will likely serve as a case study for other companies seeking to build AI systems that are both innovative and socially responsible. Its outcome could set important precedents for how AI is regulated and help ensure that future technologies are developed with fairness and inclusivity at their core.

Ultimately, the development of ethical AI will require a collaborative effort among technology companies, government regulators, academics, and civil society. By fostering open dialogue and taking proactive steps to address the risks associated with AI, we can help ensure that these powerful technologies are used in ways that benefit society as a whole.

For more information on AI regulations and their impact on technology, visit the ACLU’s Privacy and Technology section.

See more Future Tech Daily