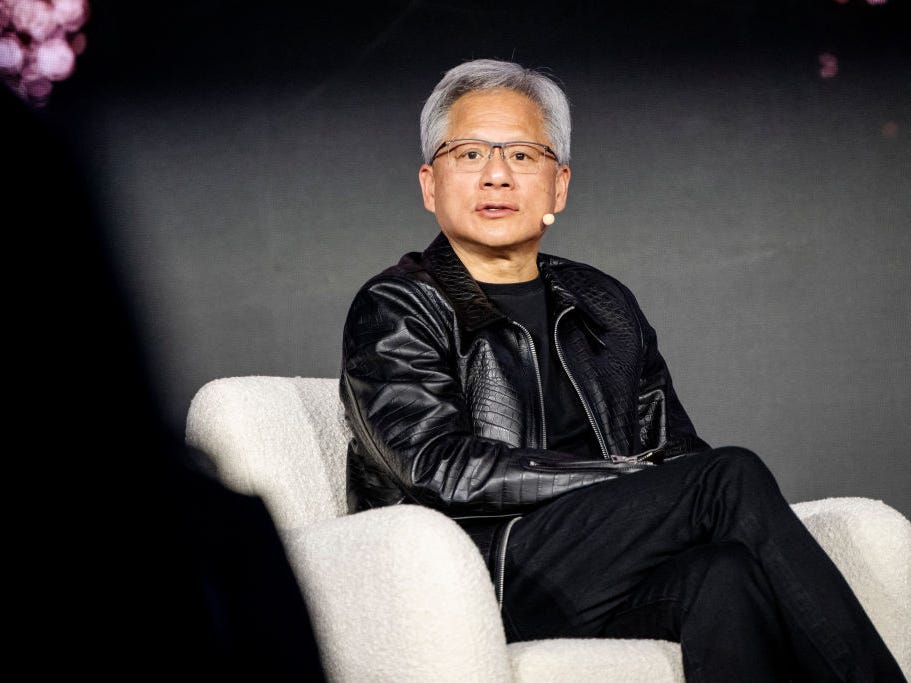

Jensen Huang, the CEO of Nvidia, has long been one of the leading voices in the artificial intelligence (AI) industry. Nvidia has been at the forefront of AI hardware and software, making it a key player in powering next-generation technologies. In a recent interview, Huang discussed the progress and challenges in the AI field, offering a candid perspective on why fully trustworthy AI systems are still years away.

The Long Road to Trustworthy AI: A Vision of Progress and Caution

Artificial intelligence has made remarkable strides in recent years, with companies across the globe increasingly integrating AI-driven solutions into their operations. From chatbots to autonomous vehicles, AI has shown that it can solve complex problems and streamline processes. Despite these advances, Nvidia CEO Jensen Huang warns that we are still far from creating AI systems that can be entirely trusted. He says it may take several years before AI can be considered safe and reliable enough to make crucial decisions on behalf of humans.

This caution comes at a time when AI technologies are being deployed in high-stakes industries, including healthcare, finance, and law enforcement. AI failures in these sectors could have catastrophic consequences for individuals, organizations, and society at large. Huang’s insights underscore the importance of responsible AI development, so that these systems are not only powerful but also safe, ethical, and transparent.

The Current State of AI: What Needs to Be Improved?

At present, AI is capable of impressive feats, such as predicting health outcomes, automating customer service, and even creating art. However, Huang points out that AI systems still lack the consistency and understanding that would make them completely trustworthy. Here are some of the key areas where AI still has significant gaps:

- Bias and Fairness: AI models can inherit biases from the data they are trained on, which can lead to discriminatory outcomes. This has been a concern in fields like hiring, lending, and criminal justice, where biased algorithms could inadvertently perpetuate inequality.

- Transparency and Explainability: Many AI systems, particularly deep learning models, operate as “black boxes.” Their decision-making processes are not always clear, making it difficult to understand how and why certain conclusions are reached.

- Accountability: Determining who is responsible when an AI system makes an error remains an unresolved issue. As AI systems become more autonomous, there is a growing need for clear frameworks that define accountability for their actions.

- Safety and Security: AI systems are vulnerable to adversarial attacks, where malicious actors manipulate data to mislead the system. Ensuring the security and robustness of AI systems is essential, especially in high-risk environments like autonomous driving.
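
To make the bias concern above more concrete, here is a minimal sketch of one common fairness check, the demographic parity gap: the difference in positive-decision rates between groups. The function names, the group labels, and the data are all hypothetical and made up for illustration; this is not a method described by Huang or Nvidia, and a small gap on this one metric does not by itself show that a system is fair.

```python
from collections import defaultdict


def selection_rates(decisions, groups):
    """Return the share of positive decisions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(decisions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical, made-up decisions (1 = approved, 0 = rejected)
# for two illustrative groups "a" and "b".
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(decisions, groups)
gap = demographic_parity_gap(decisions, groups)
print(rates, gap)
```

In this toy data, group "a" is approved three times out of four and group "b" once out of four, so the gap is 0.5. A real audit would use far more data and several complementary metrics.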

While AI has made significant progress in these areas, overcoming these challenges requires sustained effort, interdisciplinary collaboration, and rigorous testing. As Huang notes, AI systems must be able to demonstrate not just functional capabilities but also trustworthiness, which will take time to develop.

The Evolution of Trustworthy AI: Moving Beyond Perfection

One of the key challenges in AI development is the tension between striving for perfection and ensuring a gradual, safe rollout of technology. Huang highlights that AI systems don’t need to be flawless to be valuable, but they must be reliable enough to make decisions with minimal risk. Addressing bias, opacity, and safety risks will require a combination of technical advances and ethical consideration. As AI technology evolves, a critical component of trustworthiness will be the ability to adapt to new situations and learn from past mistakes without causing harm along the way.

In many cases, trust will be built incrementally. For example, rather than relying on a single AI system to make critical life-or-death decisions, AI may be deployed in tandem with human oversight, creating a hybrid system that balances computational power with human judgment. This approach could significantly reduce the risks associated with fully autonomous systems while still allowing AI to offer valuable insights and assistance.
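
The hybrid approach described above can be sketched as a simple routing rule: the system acts on its own only when the model is confident and the decision is not high-stakes, and otherwise hands the case to a person. This is an illustrative sketch under stated assumptions, not a description of any real product. The `Prediction` class, the `route` function, the `high_stakes` flag, and the 0.9 threshold are all hypothetical, and a model's self-reported confidence is not guaranteed to be well calibrated.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # model's self-reported confidence in [0, 1]


def route(prediction, threshold=0.9, high_stakes=False):
    """Return 'auto' to act on the model output, or 'human_review' to escalate.

    High-stakes decisions always go to a human; other decisions are
    escalated when the model's confidence falls below the threshold.
    """
    if high_stakes or prediction.confidence < threshold:
        return "human_review"
    return "auto"


# Hypothetical examples
print(route(Prediction("approve", 0.95)))                    # confident, routine
print(route(Prediction("approve", 0.60)))                    # low confidence
print(route(Prediction("approve", 0.99), high_stakes=True))  # always reviewed
```

The design choice here is that escalation is the default whenever either condition is in doubt, so lowering risk never depends on the model being right about its own confidence in high-stakes cases.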

Regulation and Ethical Standards: Shaping the Future of AI

The development of trustworthy AI will also require robust regulatory frameworks and ethical standards that guide its deployment and use. As Huang suggests, the pace of AI development is outstripping the creation of regulations that can ensure safety and fairness. Governments and industry leaders are working to address this gap, but the complexity of AI technologies makes it challenging to develop comprehensive regulations that can keep up with innovation.

Some countries and regions are already taking steps to establish ethical AI frameworks. The European Union, for example, has introduced the Artificial Intelligence Act, which aims to set clear rules for the development and use of AI across various sectors. The United States, meanwhile, is considering a more industry-driven approach, with technology companies like Nvidia, Google, and Microsoft taking a leading role in shaping AI ethics through self-regulation and voluntary guidelines.

Despite these efforts, Huang emphasizes that effective regulation must be flexible enough to account for the rapid pace of innovation in AI. Firm rules are important, but they should not stifle progress or prevent new technologies from being explored. Instead, regulators should work alongside researchers and developers to create a framework that allows for both innovation and accountability.

AI and Human Collaboration: The Path Forward

AI has enormous potential, but Huang argues that it is meant to augment human capabilities, not to replace humans. AI systems can help automate tedious tasks, identify patterns in data, and support decision-making, but human expertise and oversight remain essential. Rather than seeing AI as a threat to jobs or human autonomy, Huang believes that AI will become a tool that empowers people to achieve more than ever before.

In fact, the collaboration between AI and human intelligence will likely be the key to creating trustworthy AI systems. As AI becomes more integrated into everyday life, it will be essential for humans to understand how these systems work, how they make decisions, and how to intervene when necessary. By developing AI with human-centered design principles and fostering a culture of transparency and ethical responsibility, the industry can ensure that AI remains a force for good in society.

Conclusion: The Road Ahead for Trustworthy AI

While Nvidia’s Jensen Huang is optimistic about the future of AI, he also urges caution when it comes to trust and reliability. The development of trustworthy AI systems will be a long journey, requiring continuous improvements in transparency, fairness, accountability, and safety. As the AI landscape evolves, stakeholders must work together to ensure that AI technologies are not only powerful but also ethical and secure.

The road to trustworthy AI is challenging, but with the right combination of technical expertise, regulatory oversight, and ethical commitment, the AI industry can overcome these hurdles and create systems that benefit all of humanity. AI is not just a tool for the future. It is already shaping our world, and its role will only grow more prominent in the years to come. The ultimate goal is to develop AI that can be trusted, relied upon, and safely integrated into society, and reaching it will take time, collaboration, and continued innovation.
