Navigating the Ethical Boundaries: What Questions Should ChatGPT Avoid?

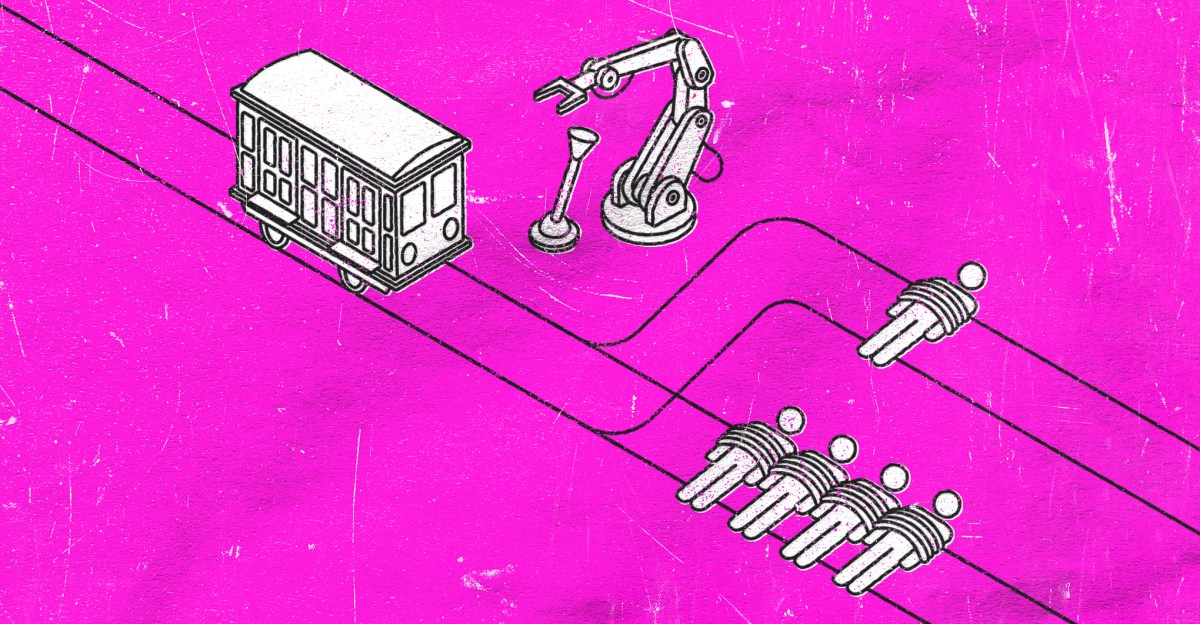

As artificial intelligence becomes part of everyday life, one pressing question emerges: which inquiries should remain off-limits for AI systems like ChatGPT? Understanding the ethical boundaries of AI interaction matters most for sensitive topics such as health, law, and personal crises. This article examines the ethical considerations and potential pitfalls of AI responses, with the aim of clarifying what to steer clear of when engaging with AI technologies.

The Importance of Ethical AI

The rise of AI brings with it many ethical dilemmas. As tools like ChatGPT become more integrated into personal and professional settings, responsibility for their ethical use falls not only on developers but also on users. Ethical AI is essential to ensure that technology benefits people and does not contribute to harm or misinformation.

AI systems can generate responses that imitate human conversation, but they lack emotional intelligence, moral reasoning, and a nuanced understanding of human experience. Without careful management, this gap can lead to inappropriate or harmful interactions. Recognizing which questions ChatGPT should avoid is therefore a vital step in promoting ethical AI use.

Categories of Questions to Avoid

Several types of questions fall outside the ethical boundaries of AI interaction. The main categories are:

- Personal Health and Medical Advice: AI should not provide medical diagnoses or treatment recommendations. The risk of misdiagnosis or inappropriate advice can have serious, even life-threatening consequences.

- Legal Guidance: Similar to medical advice, legal counsel requires professional expertise. AI can misinterpret laws or regulations, leading users to make uninformed decisions that could result in legal penalties.

- Sensitive Personal Issues: Questions related to mental health, trauma, or personal crises should be approached with caution. AI may lack the empathy required to handle such delicate matters appropriately.

- Ethnic, Racial, or Religious Discrimination: Discussions that perpetuate stereotypes or discriminatory views can harm marginalized communities and deepen social divides.

- Violence and Self-harm: AI should not engage with inquiries about self-harm, suicide, or violence in ways that could encourage harmful behavior, such as providing methods or instructions. Instead, it can point users to general support resources, such as crisis hotlines or emergency services.

Why These Questions Are Off-Limits

Understanding the rationale behind avoiding certain questions is essential for responsible AI use. Here are some key reasons:

1. Lack of Expertise

AI systems like ChatGPT are trained on vast datasets, but they do not possess the specialized judgment of a qualified professional. For instance, while AI may know medical terminology, it cannot examine a patient or review their history, so it cannot accurately assess an individual’s health condition. This lack of expertise can lead to misguided advice and potentially harmful outcomes.

2. Ethical Responsibility

Developers and users of AI share an ethical responsibility to mitigate harm. By avoiding sensitive topics, we protect users from misinformation and emotional distress. Ethical responsibility extends beyond legal concerns; it encompasses the moral implications of the technology we create and use.

3. Emotional Limitations

AI lacks the ability to empathize, which is crucial when discussing sensitive topics. Conversations about mental health or personal crises require a level of compassion and understanding that AI simply cannot provide. Therefore, directing users towards qualified professionals is a more responsible approach.

How to Encourage Ethical AI Use

Encouraging ethical AI use involves a collaborative effort among developers, users, and policymakers. Here are some strategies to foster a responsible AI environment:

- Implement Clear Guidelines: Developers should establish clear ethical guidelines for AI interactions. These guidelines should outline what types of questions are inappropriate and set boundaries for acceptable use.

- User Education: Educating users about the limitations of AI is crucial. Users should understand that while AI can provide information, it is not a substitute for professional advice.

- Regular Audits of AI Responses: Continuous monitoring and evaluation of AI responses can help identify and rectify inappropriate or harmful output. This process should involve feedback from both users and experts in relevant fields.

- Encourage Reporting Mechanisms: Providing users with a way to report problematic responses can help improve AI systems. Feedback from users is invaluable in refining AI interactions and ensuring ethical standards are met.
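To make the audit and reporting ideas above more concrete, here is a minimal sketch of how a developer might collect user reports and summarize them for periodic review. Everything in it is hypothetical and chosen for illustration: the `ReportQueue` and `ResponseReport` names, the set of report reasons, and the in-memory storage (a real service would persist reports and route them to human reviewers).

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical report reasons; a real system would define its own taxonomy.
VALID_REASONS = {"inaccurate", "harmful", "biased", "other"}


@dataclass
class ResponseReport:
    """One user report about a single AI response (illustrative fields only)."""
    response_id: str
    reason: str
    comment: str = ""
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class ReportQueue:
    """In-memory store of user reports that auditors can summarize periodically."""

    def __init__(self) -> None:
        self._reports: list[ResponseReport] = []

    def submit(self, response_id: str, reason: str, comment: str = "") -> ResponseReport:
        # Reject unknown reasons so audit summaries stay consistent.
        if reason not in VALID_REASONS:
            raise ValueError(f"unknown reason: {reason!r}")
        report = ResponseReport(response_id, reason, comment)
        self._reports.append(report)
        return report

    def audit_summary(self) -> Counter:
        """Count reports per reason so reviewers can see which problems recur."""
        return Counter(r.reason for r in self._reports)

    def most_reported(self, n: int = 3) -> list[tuple[str, int]]:
        """Return the response IDs reported most often, to prioritize expert review."""
        return Counter(r.response_id for r in self._reports).most_common(n)
```

In this sketch, the per-reason summary supports the "regular audits" step, while `most_reported` gives experts a short list of responses to examine first.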

The Role of Developers in Ethical AI

Developers play a critical role in ensuring that AI systems operate within ethical boundaries. Here are some responsibilities they bear:

1. Designing for Safety

Incorporating safety features into AI design is paramount. Developers should implement mechanisms, such as classifiers or filters, that detect and flag sensitive inquiries and guide users away from potentially harmful topics, for example by pointing them to a qualified professional.
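As a rough illustration of "detect and flag," the sketch below matches a prompt against keyword patterns for a few of the categories discussed earlier and returns a referral message. It is a deliberately simple assumption, not how ChatGPT or any particular product works: production systems typically rely on trained classifiers, and the pattern lists, category names, and referral texts here are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical category -> keyword patterns. Keyword matching is only an
# illustration; real systems would use trained classifiers instead.
SENSITIVE_PATTERNS = {
    "medical": [r"\bdiagnos\w*", r"\bdosage\b", r"\bsymptoms?\b"],
    "legal": [r"\blawsuit\b", r"\bsue\b", r"\blegal advice\b"],
    "self_harm": [r"\bself[- ]harm\b", r"\bsuicid\w*"],
}

# Hypothetical referral messages, one per category.
REFERRALS = {
    "medical": "Please consult a licensed healthcare provider.",
    "legal": "Please consult a qualified lawyer in your jurisdiction.",
    "self_harm": "If you are in crisis, please contact a local crisis line or emergency services.",
}


@dataclass
class FlagResult:
    flagged: bool
    categories: List[str]
    message: Optional[str]


def flag_inquiry(text: str) -> FlagResult:
    """Return the sensitive categories a prompt matches, plus a referral message."""
    lowered = text.lower()
    hits = [
        category
        for category, patterns in SENSITIVE_PATTERNS.items()
        if any(re.search(p, lowered) for p in patterns)
    ]
    if not hits:
        return FlagResult(False, [], None)
    # Combine referrals for every matched category, in dictionary order.
    message = " ".join(REFERRALS[c] for c in hits)
    return FlagResult(True, hits, message)
```

For example, `flag_inquiry("What dosage of ibuprofen should I take?")` would be flagged under `"medical"` and return the healthcare referral, while a general question such as "What is the capital of France?" passes through unflagged. Returning a referral instead of a bare refusal reflects the article's point that users should be guided toward qualified help.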

2. Fostering Transparency

Transparency about how AI systems work can build trust with users. When people understand the limitations and capabilities of AI, they are less likely to rely on it for risky, high-stakes questions.

3. Prioritizing User Well-being

Developers should prioritize user well-being in AI design. This means ensuring that interactions are supportive and constructive, steering clear of topics that could lead to harm or distress.

Conclusion

Navigating the ethical boundaries of AI like ChatGPT is a complex yet essential task. By recognizing which questions should remain off-limits, we can foster a safer and more responsible AI environment. With the rapid evolution of technology, our commitment to ethical considerations must evolve as well. As users and developers, our collective responsibility is to ensure that AI serves as a force for good, enhancing lives without crossing ethical lines. In doing so, we not only protect individuals but also contribute to a more informed and compassionate society.

See more Future Tech Daily