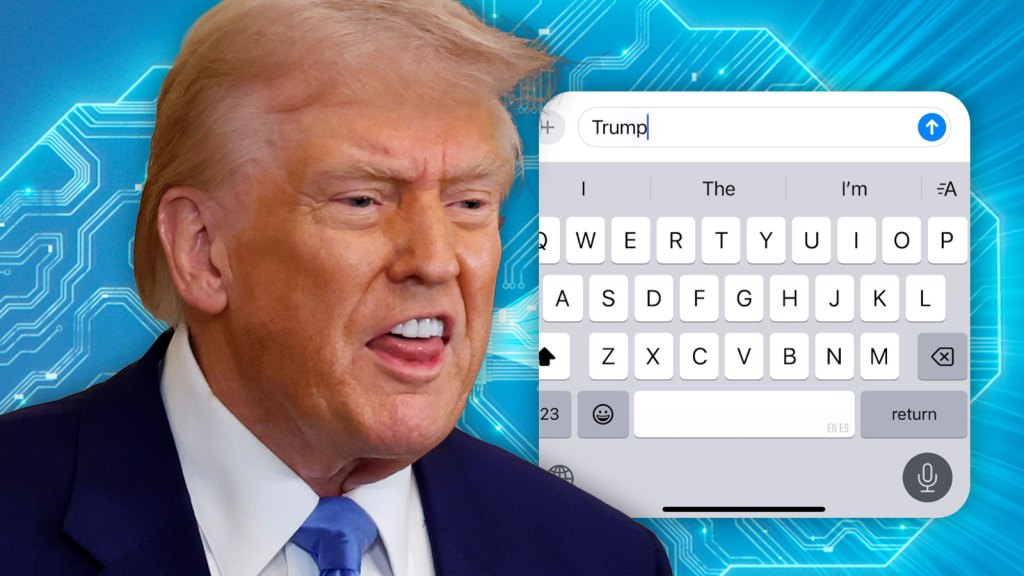

Apple Faces Backlash Over Dictation Error: Mistaking “Racist” for “Trump”

Voice recognition software plays a central role in how we interact with our devices. Apple recently found itself at the center of controversy after its dictation feature transcribed the word “racist” as “Trump.” The error has sparked significant backlash and raised questions about the reliability and accuracy of voice recognition technology. As the company works on a fix, many users are left wondering what such errors mean for the future of dictation services.

The Incident: What Happened?

The uproar began when users reported that Apple’s dictation feature, which converts spoken words into text, was transcribing the word “racist” as “Trump” in various contexts. This was not an isolated incident: multiple users on different devices, including iPhones and iPads, shared their experiences on social media, highlighting how the glitch affected conversations about race and politics.

Imagine discussing a serious topic involving social justice, only to find that your device has altered your words to mention a political figure instead. Such inaccuracies can undermine the seriousness of conversations and misrepresent the speaker’s intentions. As users pointed out, the implications go beyond mere inconvenience; they touch on issues of trust in technology, particularly in sensitive discussions.

Why Is This a Significant Issue?

At first glance, a simple transcription error might seem trivial. However, in the context of ongoing societal debates about race and political discourse, the stakes are considerably higher. Here are a few reasons why this incident has generated such a strong reaction:

- Impact on Communication: Miscommunication can lead to misunderstandings, misrepresentations, and ultimately, a breakdown in dialogue. When technology plays a role in this, it raises concerns about its reliability.

- Trust in Technology: Users expect technology to be an aid in communication, not a barrier. This incident may lead to diminished trust in Apple’s dictation services, affecting user experience.

- Political Sensitivities: Given the polarized political climate, any mention of a figure like Donald Trump can evoke strong emotions. Turning a serious discussion into apparent political commentary can detract from the original message.

- Broader Implications for AI: This incident underscores the challenges of developing artificial intelligence that truly understands context and nuance in human language.

Apple’s Response: Addressing the Backlash

In light of the backlash, Apple has acknowledged the issue and is reportedly working on a fix. This includes updates to its voice recognition algorithms to enhance accuracy and ensure that such errors are minimized in the future. Apple’s commitment to addressing user concerns reflects its understanding of the importance of maintaining trust in its products.

Moreover, Apple’s response may reassure users that their feedback is valued and that the company is dedicated to improving the user experience. Updates to the dictation software will likely include improved context awareness, allowing the technology to better distinguish between similar-sounding words and phrases.

Exploring the Challenges of Voice Recognition Technology

The incident with Apple’s dictation feature highlights several broader challenges within the field of voice recognition technology:

1. Contextual Understanding

One of the most significant hurdles for voice recognition systems is understanding context. Human language is nuanced, with different meanings based on tone, intonation, and surrounding conversation. Current AI models often struggle with these subtleties, leading to misinterpretations like the one experienced by Apple users.
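To make the role of context concrete, here is a minimal sketch of how surrounding words could be used to choose between competing transcriptions. It is a toy illustration, not Apple's method: the corpus, the acoustic scores, and the function names `build_bigram_counts` and `rescore` are all hypothetical, and real systems use far larger language models.

```python
from collections import Counter

# Hypothetical toy corpus standing in for real training text.
CORPUS = [
    "the policy was called racist by critics",
    "critics said the remark was racist",
    "trump spoke at the rally",
    "the rally featured trump",
]


def build_bigram_counts(sentences):
    """Count how often each pair of adjacent words appears."""
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        counts.update(zip(words, words[1:]))
    return counts


def rescore(previous_word, acoustic_scores, bigram_counts):
    """Pick the candidate with the best combined acoustic and context score.

    acoustic_scores maps each candidate word to an assumed score from the
    audio model; the context bonus is 1 + the bigram count with previous_word.
    """
    return max(
        acoustic_scores,
        key=lambda w: acoustic_scores[w] * (1 + bigram_counts[(previous_word, w)]),
    )


BIGRAMS = build_bigram_counts(CORPUS)

# Assumed acoustic scores: the audio model slightly prefers the wrong word.
candidates = {"trump": 0.55, "racist": 0.45}
print(rescore("was", candidates, BIGRAMS))
```

In this sketch the audio evidence alone favors the wrong word, but the preceding word "was" tips the choice toward the candidate that actually follows it in the sample text.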

2. Continuous Learning

Voice recognition systems rely on vast amounts of data to learn and improve. However, they must also adapt to changing language use and emerging slang or political terminology. This requires ongoing updates and adjustments from developers to ensure that systems remain relevant and accurate.

3. Bias in AI

Another pressing issue is the potential for bias in AI systems. If the training data used to develop voice recognition software is skewed or lacks diversity, the resulting algorithms may reflect those biases. This raises ethical concerns, particularly when technology is used in sensitive contexts.
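One common way to check for this kind of skew is to compare transcription accuracy across groups of speakers. The sketch below computes word error rate (word-level edit distance divided by reference length) and averages it per group. The group labels and sample sentences are invented for illustration, and `wer_by_group` is a hypothetical helper, not part of any Apple tool.

```python
from collections import defaultdict


def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed reference
    # prefix and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def wer_by_group(samples):
    """Average WER per group; samples are (group, reference, hypothesis)."""
    rates = defaultdict(list)
    for group, reference, hypothesis in samples:
        rates[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(values) / len(values) for group, values in rates.items()}


# Invented example data: two speaker groups with different error patterns.
samples = [
    ("group_a", "that remark was racist", "that remark was racist"),
    ("group_b", "that remark was racist", "that remark was trump"),
]
print(wer_by_group(samples))
```

A large gap between groups in a report like this would suggest that the training data underrepresents some speakers.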

Restoring User Trust: Moving Forward

As Apple works to rectify the dictation error, restoring user trust will be paramount. Here are some steps the company might consider:

- Transparent Communication: Keeping users informed about updates and changes to the dictation feature will foster a sense of collaboration and understanding.

- User Feedback Mechanism: Implementing a more robust feedback system can help Apple identify issues quickly and ensure that user experiences are continuously improving.

- Enhanced Training Data: By diversifying the data used to train voice recognition algorithms, Apple can work towards minimizing biases and improving accuracy.

The Bigger Picture: Voice Recognition in Our Lives

Voice recognition technology is becoming an integral part of our daily lives, from virtual assistants like Siri to transcription services used in professional settings. As users become more reliant on these tools, the importance of accuracy and reliability cannot be overstated. Companies like Apple must prioritize these factors to ensure their products remain valuable to consumers.

In conclusion, the backlash over Apple’s dictation error serves as a reminder of the complexities of language and technology. As the company addresses the issue, it has an opportunity to demonstrate its commitment to user experience and technological advancement. By focusing on improving the accuracy of dictation services, Apple can restore user trust and reinforce its position as a leader in the tech industry.
